Difference between revisions of "Projects:BayesianMRSegmentation"

Revision as of 18:46, 10 September 2009


Bayesian Segmentation of MRI Images

The aim of this project is to develop, implement, and validate a generic method for automatically segmenting MRI images. Towards this end, we design parametric computational models of how MRI images are generated, and then use these models to obtain automated segmentations in a Bayesian framework.

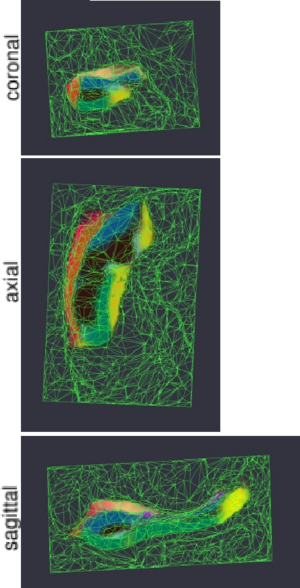

The model we have developed incorporates a prior distribution that makes predictions about where neuroanatomical labels typically occur throughout an image, and is based on a generalization of probabilistic atlases that uses a deformable, compact tetrahedral mesh representation. The model also includes a likelihood distribution that predicts how a label image, where each voxel is assigned a unique neuroanatomical label, translates into an MRI image, where each voxel has an intensity.
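In symbols, the two parts of the model can be summarized roughly as follows. The notation is introduced here for illustration and does not come from the project page: $l$ is the label image, $y$ the MRI intensities, $x$ the positions of the mesh nodes (the atlas deformation), and $\theta = \{\mu_k, \sigma_k^2\}$ the likelihood parameters. Two things are assumptions: that the prior factorizes over voxels $i$ once the mesh position is fixed, and that the likelihood is Gaussian with one mean and one variance per structure. The intensity inhomogeneity term is left out for brevity.

```latex
\begin{align}
p(l \mid x) &= \prod_{i} p_i(l_i \mid x)
  && \text{(prior: deformable mesh-based atlas)} \\
p(y \mid l, \theta) &= \prod_{i} \mathcal{N}\!\left(y_i \mid \mu_{l_i}, \sigma^2_{l_i}\right)
  && \text{(likelihood: labels to intensities)} \\
p(l \mid y, x, \theta) &\propto p(y \mid l, \theta)\, p(l \mid x)
  && \text{(posterior used for segmentation)}
\end{align}
```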

Given an image to be segmented, we first estimate the parameters of the model that are most probable in light of the data. The parameter estimation involves finding the deformation that optimally warps the mesh-based probabilistic atlas onto the image under study, estimating MRI intensity inhomogeneities corrupting the image, as well as finding the mean intensity and the intensity variance for each of the structures to be segmented. Once these parameters are estimated, the most probable image segmentation is obtained.
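The two-stage procedure described above (estimate parameters, then pick the most probable labels) can be illustrated with a heavily simplified sketch. It is not the project's implementation: it assumes the atlas has already been warped onto the image, so the prior is a fixed per-voxel table of label probabilities, it ignores intensity inhomogeneities, and it assumes a Gaussian intensity model per structure fitted with a standard EM loop. The function name `fit_and_segment` and its arguments are hypothetical.

```python
import numpy as np


def fit_and_segment(intensities, prior, n_iter=50):
    """Estimate per-structure mean/variance, then return the most probable labels.

    intensities: shape (N,), one intensity per voxel.
    prior: shape (N, K), atlas probability of each of K labels at each voxel
           (assumed already warped onto the image; rows sum to 1).
    """
    y = np.asarray(intensities, dtype=float)
    prior = np.asarray(prior, dtype=float)
    weights = prior.copy()  # start from the atlas prior as soft label assignments

    for _ in range(n_iter):
        # M-step: weighted mean and variance for each structure.
        weight_sum = weights.sum(axis=0) + 1e-12
        means = (weights * y[:, None]).sum(axis=0) / weight_sum
        variances = (weights * (y[:, None] - means) ** 2).sum(axis=0) / weight_sum
        variances = variances + 1e-6  # guard against zero variance

        # E-step: posterior label probabilities, computed in the log domain.
        log_lik = (-0.5 * np.log(2.0 * np.pi * variances)
                   - 0.5 * (y[:, None] - means) ** 2 / variances)
        log_post = np.log(prior + 1e-12) + log_lik
        log_post -= log_post.max(axis=1, keepdims=True)
        weights = np.exp(log_post)
        weights /= weights.sum(axis=1, keepdims=True)

    # With the parameters fixed, the most probable label at each voxel.
    labels = weights.argmax(axis=1)
    return means, variances, labels
```

Note that the prior must be informative for this sketch to work: with a completely uniform prior all structures start from identical statistics and the loop cannot tell them apart.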

Model-Based Segmentation of Hippocampal Subfields in In Vivo MRI

Recent developments in MR data acquisition technology are starting to yield images that show anatomical features of the hippocampal formation at an unprecedented level of detail, providing the basis for hippocampal subfield measurement. Because of the role of the hippocampus in human memory and its implication in a variety of disorders and conditions, the ability to reliably and efficiently quantify its subfields through in vivo neuroimaging is of great interest to both basic neuroscience and clinical research.

The aim of this project is to develop and validate a fully automated method for segmenting the hippocampal subfields in ultra-high resolution MRI data.

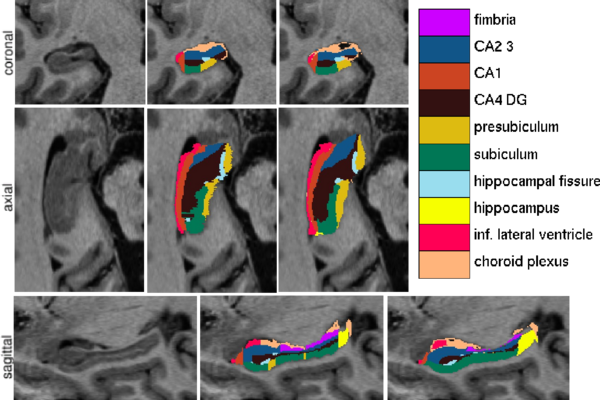

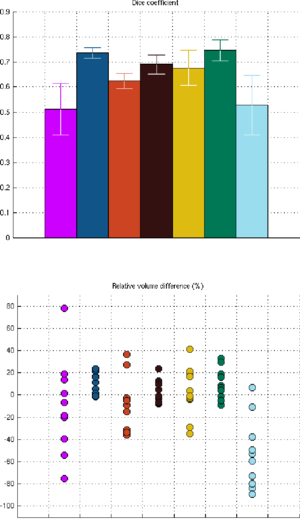

We have validated the proposed technique by comparing our automated segmentation results with corresponding manual delineations in ultra-high resolution MRI scans (voxel size 0.38 × 0.38 × 0.80 mm³) of 10 individuals. For each of seven structures of interest (fimbria, CA1, CA2/3, CA4/DG, presubiculum, subiculum, and hippocampal fissure), we calculated the Dice overlap coefficient, defined as the volume of overlap between the automated and manual segmentation divided by their mean volume. We used a leave-one-out cross-validation strategy, in which we built an atlas mesh from the delineations in nine subjects, and used this to segment the image of the remaining subject. We repeated this process for each of the 10 subjects, and compared the automated segmentation results with the corresponding manual delineations.
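As an illustration of the evaluation described above, the following sketch computes the Dice overlap exactly as defined in the text (overlap volume divided by the mean of the two volumes) and enumerates leave-one-out splits. The function names and the representation of a structure as a boolean voxel mask are assumptions made for this example; the atlas building and segmentation steps themselves are not shown.

```python
import numpy as np


def dice_overlap(auto_mask, manual_mask):
    """Dice coefficient of two binary masks for one structure."""
    auto_mask = np.asarray(auto_mask, dtype=bool)
    manual_mask = np.asarray(manual_mask, dtype=bool)
    overlap = np.logical_and(auto_mask, manual_mask).sum()
    mean_volume = 0.5 * (auto_mask.sum() + manual_mask.sum())
    if mean_volume == 0:
        return float("nan")  # structure absent from both segmentations
    return overlap / mean_volume


def leave_one_out_splits(subject_ids):
    """Yield (training_ids, held_out_id) pairs, one per subject."""
    for held_out in subject_ids:
        training = [s for s in subject_ids if s != held_out]
        yield training, held_out
```

With 10 subjects, `leave_one_out_splits` yields 10 pairs, each with nine training subjects from which an atlas would be built and one held-out subject to segment and score.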

Figure 1 compares the manual and automated segmentation results qualitatively on a set of cross-sectional slices. The upper half of Figure 2 shows the average Dice overlap measure for each of the structures of interest, along with error bars that indicate the standard errors around the mean. The lower half of the figure shows, for each structure, the volume differences between the automated and manual segmentations relative to their mean volumes. An example of our mesh-based probabilistic atlas, derived from nine manually labeled hippocampi, is shown in Figure 3.
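The summary statistics behind such a plot can be sketched as follows. This is an illustrative reading of the description, not the code used to produce the figures: the text does not say whether the volume difference is signed, so a signed difference (automated minus manual) is assumed here, and the standard error is taken as the sample standard deviation divided by the square root of the number of subjects. Function names are hypothetical.

```python
import numpy as np


def relative_volume_difference(auto_volume, manual_volume):
    """Signed volume difference relative to the mean of the two volumes."""
    mean_volume = 0.5 * (auto_volume + manual_volume)
    return (auto_volume - manual_volume) / mean_volume


def mean_and_standard_error(values):
    """Mean across subjects and the standard error of that mean."""
    values = np.asarray(values, dtype=float)
    standard_error = values.std(ddof=1) / np.sqrt(values.size)
    return values.mean(), standard_error
```

Applied per structure to the 10 leave-one-out results, `mean_and_standard_error` gives one bar height and one error bar for each of the seven structures.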

Integration of the Segmentation Methodology into Slicer

Segmentation algorithms based on Expectation Maximization (EM) have proven capable of producing high-quality results. Generally, however, such results were obtained by carefully optimizing the parameters for a specific MRI protocol and a specific anatomical region. Moreover, segmenting a standard-size MRI scan often requires a processing time on the order of minutes or hours. Because of these constraints, EM algorithms have found limited use in the clinical environment. Our project aims to address these issues by designing a new framework that a clinician can readily work with. The background of our team encompasses Computer Science and Radiology. Our focus is threefold: first, to identify the bottlenecks of existing EM algorithms; second, to validate the quality of our method on a collection of real-life scans; and finally, to provide an implementation intuitive enough to be accepted in the hospital and therefore make a difference in the treatment of the patient.

Key Investigators

- MIT: Koen Van Leemput, Sylvain Jaume, Polina Golland

- BWH: Steve Pieper, Ron Kikinis

Publications

In Print

In Press

- Automated Segmentation of Hippocampal Subfields from Ultra-High Resolution In Vivo MRI, K. Van Leemput, A. Bakkour, T. Benner, G. Wiggins, L.L. Wald, J. Augustinack, B.C. Dickerson, P. Golland, and B. Fischl. Hippocampus. Accepted for Publication, 2009.

- Encoding Probabilistic Atlases Using Bayesian Inference, K. Van Leemput. IEEE Transactions on Medical Imaging. Accepted for Publication, 2009.