Projects:PainAssessment

Introduction

Pain assessment in patients who are unable to communicate verbally with the medical staff is a challenging problem in critical care. This problem is most prominently encountered in sedated patients in the intensive care unit (ICU) recovering from trauma and major surgery, as well as in infant patients and patients with brain injuries. Current practice in the ICU requires the nursing staff to assess the pain and agitation experienced by the patient and to take appropriate action to ameliorate the patient’s anxiety and discomfort.

The fundamental limitations in sedation and pain assessment in the ICU stem from the use of subjective assessment criteria rather than quantifiable, measurable data. This often results in poor-quality treatment of patient agitation that is inconsistent from nurse to nurse. Recent advances in computer vision techniques can assist the medical staff in assessing sedation and pain by constantly monitoring the patient and providing the clinician with quantifiable data. An automatic pain assessment system can be used within a decision support framework that can also provide automated sedation and analgesia in the ICU. Closed-loop sedation control requires a quantifiable feedback signal that reflects some measure of the patient’s agitation, so a non-subjective agitation assessment algorithm can be a key component in developing closed-loop sedation control algorithms.

Individuals in pain manifest their condition through "pain behavior", which includes facial expressions. Clinicians regard the patient’s facial expression as a valid indicator of pain and pain intensity. Hence, correct interpretation of the patient’s facial expressions and their correlation with pain is a fundamental step in designing an automated pain assessment system. Other pain behaviors, including head movement and the movement of other body parts, along with physiological indicators of pain such as heart rate, blood pressure, and respiratory rate responses, should also be included in such a system.

Computer vision techniques can be used to quantify agitation in sedated ICU patients. In particular, such techniques can be used to develop objective agitation measurements from patient motion. Paraplegic patients cannot produce whole-body movement, so monitoring whole-body motion is not a viable solution for them. In this case, measuring head motion and facial grimacing can be a useful alternative for quantifying patient agitation in critical care.

Pain Recognition using Sparse Kernel Machines

Support Vector Machines (SVM) and Relevance Vector Machines (RVM) were used to identify the facial expressions corresponding to pain. A total of 21 subjects from the infant COPE database were selected such that for each subject at least one photograph corresponded to pain and one to non-pain. The number of photographs available for each subject ranged from 5 to 12, with a total of 181 photographs considered. We applied the leave-one-out method for validation.

The classification accuracy for the SVM algorithm with a linear kernel was 90%. Applying the RVM algorithm with a linear kernel to the same data set resulted in an almost identical classification accuracy, namely, 91%.
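As a rough illustration of leave-one-out validation, the sketch below holds out one sample at a time, trains on the rest, and reports the fraction of held-out samples classified correctly. It is not the project's code: a nearest-centroid classifier stands in for the SVM and RVM, the two-dimensional feature vectors are made-up toy values rather than COPE data, and the function names are hypothetical. The page does not say whether one photograph or one subject was held out per round; this sketch assumes one photograph.

```python
from math import dist


def fit_centroids(samples, labels):
    """Return the mean feature vector of each class (stand-in for SVM/RVM training)."""
    sums, counts = {}, {}
    for x, y in zip(samples, labels):
        acc = sums.setdefault(y, [0.0] * len(x))
        for i, value in enumerate(x):
            acc[i] += value
        counts[y] = counts.get(y, 0) + 1
    return {y: [v / counts[y] for v in acc] for y, acc in sums.items()}


def predict(centroids, x):
    """Assign x to the class whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda y: dist(centroids[y], x))


def leave_one_out_accuracy(samples, labels):
    """Hold out each sample once, train on the others, and score the held-out sample."""
    correct = 0
    for i in range(len(samples)):
        train_x = samples[:i] + samples[i + 1:]
        train_y = labels[:i] + labels[i + 1:]
        model = fit_centroids(train_x, train_y)
        if predict(model, samples[i]) == labels[i]:
            correct += 1
    return correct / len(samples)


# Toy, well-separated feature vectors (hypothetical, not taken from the COPE database).
features = [[0.1, 0.2], [0.0, 0.3], [0.2, 0.1], [0.9, 0.8], [1.0, 0.7], [0.8, 0.9]]
labels = ["non-pain", "non-pain", "non-pain", "pain", "pain", "pain"]

accuracy = leave_one_out_accuracy(features, labels)
print(f"leave-one-out accuracy: {accuracy:.2f}")
```

Swapping in a real classifier only requires replacing `fit_centroids` and `predict`; the hold-out loop stays the same.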

Pain Intensity Assessment

In addition to classification, the RVM algorithm provides the posterior probability that a test image belongs to a class. Under a Bayesian interpretation, the probability of an event can be read as the degree of uncertainty associated with that event. This uncertainty can be used to estimate pain intensity.

In particular, if a classifier is trained with a series of facial images corresponding to pain and non-pain, then there is some uncertainty in assigning the facial image of a person experiencing moderate pain to the pain class. The efficacy of this interpretation of the posterior probability was validated by comparing the algorithm’s pain assessment with that of several experts (intensivists) and non-experts.
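To make the idea concrete, the sketch below computes a class posterior in the way the standard RVM classification formulation does: a logistic sigmoid applied to a weighted sum of kernel evaluations against the relevance vectors. The relevance vectors, weights, and bias here are hypothetical placeholder values, not parameters learned from the COPE images, and the function names are illustrative only.

```python
import math


def linear_kernel(a, b):
    """Inner product of two feature vectors."""
    return sum(x * y for x, y in zip(a, b))


def sigmoid(activation):
    """Numerically stable logistic sigmoid."""
    if activation >= 0:
        return 1.0 / (1.0 + math.exp(-activation))
    e = math.exp(activation)
    return e / (1.0 + e)


def pain_posterior(x, relevance_vectors, weights, bias):
    """Posterior probability of the pain class for feature vector x."""
    activation = bias + sum(
        w * linear_kernel(x, rv) for w, rv in zip(weights, relevance_vectors)
    )
    return sigmoid(activation)


# Hypothetical model parameters, chosen only to illustrate the computation.
relevance_vectors = [[1.0, 0.0], [0.0, 1.0]]
weights = [2.0, -2.0]
bias = 0.0

ambiguous = pain_posterior([0.0, 0.0], relevance_vectors, weights, bias)
pain_like = pain_posterior([1.0, 0.0], relevance_vectors, weights, bias)
calm_like = pain_posterior([0.0, 1.0], relevance_vectors, weights, bias)
print(ambiguous, pain_like, calm_like)
```

An image that sits on the decision boundary yields a posterior of 0.5, while images that resemble the pain or non-pain training examples move the posterior toward 1 or 0, which is the behavior the intensity interpretation relies on.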

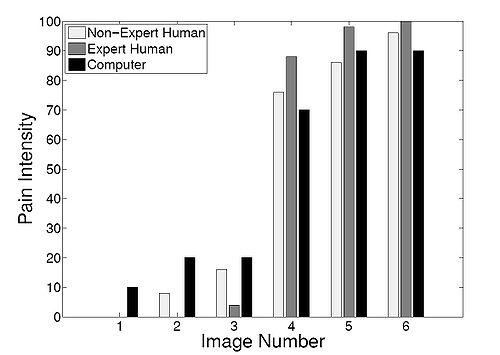

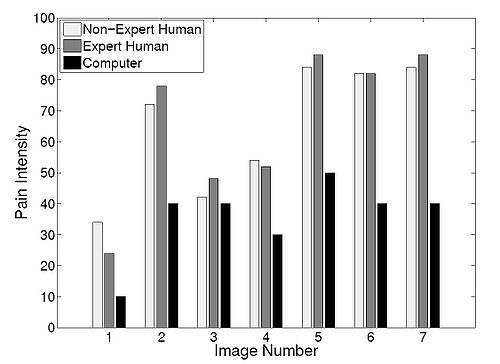

To compare the pain intensity assessment given by the RVM algorithm with human assessment, we compared the subjective pain intensity scores given by expert and non-expert examiners with the uncertainty in the pain class membership (posterior probability) given by the RVM algorithm. We randomly chose 5 infants from the COPE database, and for each subject selected two photographs of the face, one corresponding to the non-pain condition and one to the pain condition. Photographs were selected in which the infant’s facial expression truly reflected the condition (calm for non-pain and distressed for pain), and scores of 0 and 100, respectively, were assigned to these photographs to give the human examiners fair prior knowledge for assessing pain intensity.

Ten examiners were asked to provide a score ranging from 0 to 100 for each new photograph of the same subject, using a multiple of 10 for the scores. Five examiners with no medical expertise and five examiners with medical expertise were selected for this assessment. The medical experts were members of the clinical staff at the intensive care unit of the Northeast Georgia Medical Center, Gainesville, GA, consisting of one medical doctor, one nurse practitioner, and three nurses. The examiners were asked to assess the pain for a series of random photographs of the same subject, with the criterion that a score above 50 corresponds to pain, with a higher score corresponding to a higher pain intensity. Analogously, a score below 50 corresponds to non-pain, with a higher score corresponding to a higher level of discomfort. The posterior probability given by the RVM algorithm with a linear kernel for each corresponding photograph was rounded to the nearest multiple of 10 on the same 0 to 100 scale.
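The scoring convention described above can be sketched as follows: a posterior probability is mapped to the 0 to 100 scale and rounded to the nearest multiple of 10, and the result is read as pain or non-pain using the 50-point criterion. Several details are assumptions made for illustration: the page does not state how a score of exactly 50 was treated (labeled "borderline" here), it does not state which statistic was used to compare algorithm and examiner scores (a mean absolute difference is used as one simple option), and the numbers at the bottom are invented example values, not study results.

```python
import math


def posterior_to_score(posterior):
    """Map a posterior in [0, 1] to a 0-100 score rounded to the nearest multiple of 10."""
    if not 0.0 <= posterior <= 1.0:
        raise ValueError("posterior must lie in [0, 1]")
    # floor(x + 0.5) rounds halves up; Python's round() would round halves to even.
    return 10 * math.floor(posterior * 10 + 0.5)


def interpret(score):
    """Apply the 50-point criterion; the handling of exactly 50 is an assumption."""
    if score > 50:
        return "pain"
    if score < 50:
        return "non-pain"
    return "borderline"


def mean_absolute_difference(scores_a, scores_b):
    """One simple way to compare two score lists (an assumed metric, not the study's)."""
    if len(scores_a) != len(scores_b):
        raise ValueError("score lists must have the same length")
    return sum(abs(a - b) for a, b in zip(scores_a, scores_b)) / len(scores_a)


# Invented example values for illustration only.
posteriors = [0.87, 0.12, 0.64]
algorithm_scores = [posterior_to_score(p) for p in posteriors]
examiner_scores = [80, 20, 60]

print(algorithm_scores, [interpret(s) for s in algorithm_scores])
print(mean_absolute_difference(algorithm_scores, examiner_scores))
```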

Publication

B. Gholami, W. M. Haddad, and A. Tannenbaum, Agitation and Pain Assessment Using Digital Imaging, IEEE Trans. Biomed. Eng. (in submission)

Key Investigators

Georgia Tech: Behnood Gholami, Wassim M. Haddad, and Allen Tannenbaum