Project Week 25/Intra-operative deformable registration based on dense point cloud reconstruction

Key Investigators

- Sara Moccia (Istituto Italiano di Tecnologia, Italy; Politecnico di Milano, Italy)

- Roberto Cassetta (Politecnico di Milano, Italy)

Project Description

| Objective | Approach and Plan | Progress and Next Steps |

|---|---|---|

|

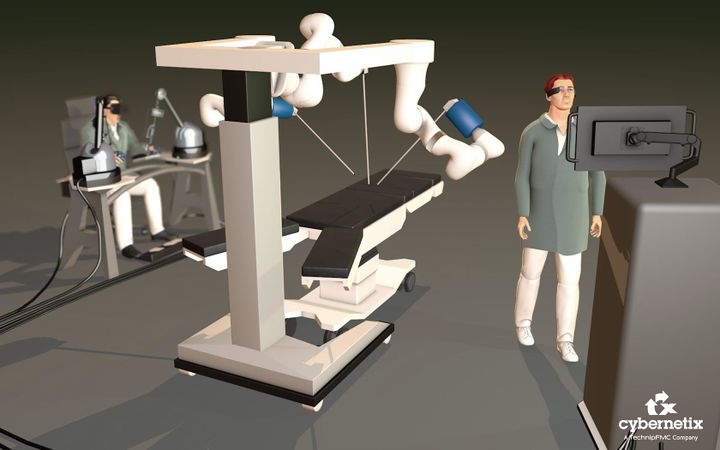

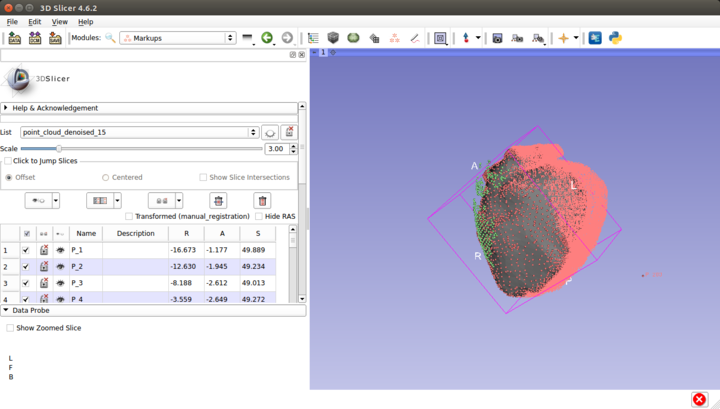

In this project, we aim at developing a feasibility study of a context-aware augmented-reality system for laparoscopic applications. The system combines pre-operative organ segmentation, pre-operative model generation, and intra-operative 3D reconstruction to automatize intra-operative registration of pre-operative organ models. This project is part of Smartsurg project (Giancarlo Ferrigno, Elena De Momi). This project has received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement No 732515. |

|

|

Illustrations

Background and References

Laparoscopy allows surgery to be performed through a few small incisions, reducing patient trauma and improving surgical outcomes. Despite these recognized medical benefits, it has limitations, including limited maneuverability, reduced haptic feedback, and a limited field of view of the surgical scene [4]. Augmented Reality (AR) systems can mitigate some of these issues by providing an enhanced view of the surgical site. One of the main open technical challenges in this field is the initial cross-modality registration between the pre-operative plan (obtained with CT or MRI) and the intra-operative surgical scene [5]. Dense 3D image reconstruction and semantic image analysis can be exploited to establish cross-modality correspondences by automatically detecting and localizing organs in the 3D endoscope field of view [3]. Deformable registration can then be performed to register the pre-operative model to the reconstructed surgical scene.
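As a rough illustration of the registration step described above, the following is a minimal sketch of rigid point-to-point ICP between a pre-operative model point set and a reconstructed scene point cloud. It is not the project's method: rigid ICP is swapped in here as a simpler stand-in that is commonly used to initialize a deformable registration, and the function names (`best_rigid_transform`, `rigid_icp`) and the synthetic data are assumptions made for this example only.

```python
import numpy as np


def best_rigid_transform(src, dst):
    """Least-squares rotation R and translation t mapping src onto dst.

    src and dst are (N, 3) arrays with row-wise correspondences (Kabsch method).
    """
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)  # 3x3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    # Guard against reflections so that R is a proper rotation.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t


def rigid_icp(model, scene, iterations=30):
    """Align model points (N, 3) to scene points (M, 3) with point-to-point ICP.

    Returns the accumulated rotation, translation, and the moved model points.
    """
    R_total, t_total = np.eye(3), np.zeros(3)
    moved = model.copy()
    for _ in range(iterations):
        # Brute-force nearest neighbour in the scene for each model point.
        d2 = ((moved[:, None, :] - scene[None, :, :]) ** 2).sum(axis=2)
        matches = scene[d2.argmin(axis=1)]
        R, t = best_rigid_transform(moved, matches)
        moved = moved @ R.T + t
        # Compose the incremental update with the transform found so far.
        R_total, t_total = R @ R_total, R @ t_total + t
    return R_total, t_total, moved


# Synthetic stand-ins (assumptions): "model" plays the pre-operative surface
# points, "scene" the intra-operative reconstruction, slightly misaligned.
rng = np.random.default_rng(0)
model = rng.uniform(-0.5, 0.5, size=(200, 3))
angle = np.deg2rad(2.0)
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle),  np.cos(angle), 0.0],
                   [0.0,            0.0,           1.0]])
scene = model @ R_true.T + np.array([0.01, -0.005, 0.005])

R_est, t_est, aligned = rigid_icp(model, scene)
print("max residual after ICP:", np.abs(aligned - scene).max())
```

The brute-force nearest-neighbour search keeps the sketch dependency-free but scales quadratically; for dense reconstructions a spatial index such as a k-d tree would be the usual choice, and a deformable model would then refine the rigid result.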

1. Yi Gao, Ron Kikinis, Sylvain Bouix, Martha Shenton, Allen Tannenbaum, "A 3D Interactive Multi-object Segmentation Tool using Local Robust Statistics Driven Active Contours," Medical Image Analysis. 2012. http://dx.doi.org/10.1016/j.media.2012.06.002

2. W. E. Lorensen, H. E. Cline, "Marching cubes: A high resolution 3D surface construction algorithm," SIGGRAPH '87 Proceedings of the 14th annual conference on Computer graphics and interactive techniques. ACM New York, NY, USA. 1987; pages 163-169.

3. V. Penza, J. Ortiz, L. S. Mattos, A. Forgione, E. De Momi, "Dense soft tissue 3D reconstruction refined with super-pixel segmentation for robotic abdominal surgery," International journal of computer assisted radiology and surgery. 2016; 11(2):197-206.

4. S. Bernhardt, S. A. Nicolau, L. Soler, C. Doignon, "The status of augmented reality in laparoscopic surgery as of 2016," Medical image analysis. 2017; 37:66-90.

5. G. Taylor, J. Barrie, A. Hood, P. Culmer, A. Neville, D. Jayne, "Surgical innovations: Addressing the technology gaps in minimally invasive surgery," Trends in Anaesthesia and Critical Care. 2013; 3(2):56-61.

Acknowledgement

We would like to acknowledge the relevant contributions of Gregory C. Sharp.